What is SuperPlan.md?

Most teams building AI agents are shipping a workflow they would never accept for human engineers.

A product decision gets made in a chat thread. A constraint is mentioned once in a standup. Someone drops a partial spec into a prompt. The agent runs, generates something “reasonable,” and the team moves on. A week later the output drifts, someone re-prompts, and now there are two competing versions of “the plan” floating around: the one you think you agreed on and the one the system is currently executing.

That failure pattern isn’t a temporary LLM quality issue. It’s a predictable outcome of how we’ve glued LLMs into workflows: implicit planning, chat-based decision making, and execution-first agents. If this sounds familiar, it should. It’s the default.

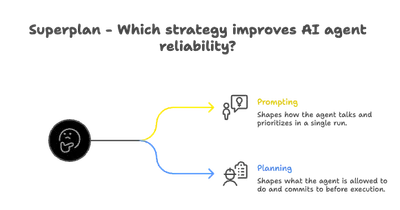

Superplan exists because “prompting harder” doesn’t fix this. Superplan is a planning substrate for AI-driven work: a layer that sits between raw ideas and execution, forces decisions to become explicit, and turns planning into something you can inspect, review, and carry forward as projects evolve. It doesn’t compete with agents, MCP tools, or copilots. It makes them behave.

The real failure modes

The common thread across most agent failures is not “hallucinations”. It’s missing intent.

When planning stays implicit, your system is effectively doing requirements inference in production.

That shows up as:

You plan in a chat, then execute in an agent.

Chats are great for exploration. They are terrible as a source of truth. There’s no stable structure, no guarantees about what’s “final” and no obvious boundary between a brainstorm and a decision. The moment you run an agent off that context, you’re baking ambiguity into execution.

You ship execution-first agents.

A lot of agent stacks are “goal → tool calls → explanation”. The explanation often reads like reasoning, but it’s just a narration of what already happened. If the agent picks the wrong approach early, everything downstream can be locally coherent and globally wrong. This is how you get confident-but-off-track outputs that look reviewable but aren’t anchored to agreed constraints.

You store decisions in prompts, not artifacts.

Most teams treat prompts like code, but without versioning discipline. The “plan” becomes a long instruction blob that changes silently every time someone edits it. When something breaks, you can’t diff intent. You can only guess which prompt mutation caused the drift.

If any of these are your current workflow, the uncomfortable truth is that your system is not failing at generation. It’s failing at governance of intent.
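To make “diffing intent” concrete, here is a minimal sketch of what it could look like if the plan lived in a structured file instead of a prompt blob. The dictionary layout, the `diff_plans` helper, and the example goal are all assumptions made for illustration; nothing here describes Superplan’s actual format.

```python
import difflib
import json


def diff_plans(old: dict, new: dict) -> list[str]:
    """Return a unified diff between two versions of a plan.

    Both versions are rendered as JSON with sorted keys so that
    only real changes in intent show up as changed lines.
    """
    def render(plan: dict) -> list[str]:
        return json.dumps(plan, indent=2, sort_keys=True).splitlines()

    return list(
        difflib.unified_diff(
            render(old),
            render(new),
            fromfile="plan@v1",
            tofile="plan@v2",
            lineterm="",
        )
    )


# Hypothetical plan versions: the second one silently drops a constraint.
v1 = {
    "goal": "Migrate auth to OAuth",
    "constraints": ["don't touch billing", "must be reversible"],
}
v2 = {
    "goal": "Migrate auth to OAuth",
    "constraints": ["must be reversible"],
}

for line in diff_plans(v1, v2):
    print(line)
```

In this sketch the dropped constraint appears as a removed (`-`) line in the diff, which is the kind of change that goes unnoticed when the plan only exists as an edited prompt.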

A concrete mental model

Treat a plan the way you treat a pull request.

A pull request is inspectable. It has a diff. It captures decisions and tradeoffs. It can be reviewed before merge. It becomes the unit of collaboration, not the final binary.

Superplan applies that mental model to AI execution. A plan is not “helpful text the model wrote.” It’s a structured, versionable artifact that exists before any agent runs.

The plan specifies:

What we’re trying to do.

What we will not do.

What constraints must hold.

What context is relevant.

What decisions are already made.

What steps are allowed to execute.

Then, and only then, agents and tools run against that plan.

This is the inversion most AI systems are missing. Without it, you’re asking the model to simultaneously interpret intent, invent structure, and execute. That’s not intelligence. That’s improvisation under uncertainty.
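As a rough illustration, the six items a plan specifies could be captured in a small typed record. This is a sketch under stated assumptions, not Superplan’s schema: the `Plan` class, its field names, and the example values are hypothetical and simply mirror the list above.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class Plan:
    """Hypothetical plan artifact; fields mirror the list in the text."""
    goal: str                        # what we're trying to do
    non_goals: tuple[str, ...]       # what we will not do
    constraints: tuple[str, ...]     # what constraints must hold
    context: tuple[str, ...]         # what context is relevant
    decisions: tuple[str, ...]       # what decisions are already made
    allowed_steps: tuple[str, ...]   # what steps are allowed to execute

    def missing_fields(self) -> list[str]:
        """Return the names of fields that are still empty."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize with sorted keys so versions diff cleanly."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values only.
plan = Plan(
    goal="Add CSV export to the reports page",
    non_goals=("Redesign the reports page",),
    constraints=("don't touch billing", "must be reversible"),
    context=("docs/reports.md",),
    decisions=("Export runs server-side",),
    allowed_steps=("edit_code", "run_tests"),
)

# A plan with gaps should be caught before any agent runs against it.
if plan.missing_fields():
    raise ValueError(f"plan is incomplete: {plan.missing_fields()}")
print(plan.to_json())
```

The record is frozen on purpose in this sketch: changing intent means producing a new version of the plan that can be reviewed, rather than mutating the one an agent is already executing against.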

What breaks over time when planning stays implicit

Early on, implicit planning feels fast. You can “just try it.” Then the project grows and the cost shows up, not as a single dramatic failure, but as chronic drag:

Context rot.

The original intent is spread across chats, docs, and prompt history. New teammates can’t tell what matters. Agents inherit a blended, outdated worldview and keep reintroducing old assumptions.

Decision amnesia.

The same arguments happen again because nothing is recorded in a durable format. You don’t have “decision memory”; you have “who remembers the last thread.”

Silent constraint erosion.

A constraint mentioned once (“don’t touch billing”, “must be reversible”, “no migrations this sprint”) disappears from the execution path. Weeks later, you’re debugging a failure that is technically correct relative to the latest prompt, but incorrect relative to the system you meant to build.

Non-deterministic iteration.

You end up spending tokens to rediscover clarity. The system produces output, you correct it, it learns nothing, and the cycle repeats. People call this “agent iteration.” Most of the time it’s just rework caused by missing plan boundaries.

None of that is solved by a better model. These are workflow failures. They require a workflow primitive.

Superplan as a planning substrate

Superplan is not a chat UI, not a copilot, and not a “smarter agent.” It’s infrastructure for making planning explicit and portable across tools.

Instead of treating planning as an afterthought hidden inside prompts, Superplan treats it as the substrate that agents execute against. It sits between raw ideas and execution so the system can’t skip the step where humans would normally be careful.

Practically, that means Superplan forces you to surface the things teams usually leave implicit until it hurts:

Structured context, not an unbounded paste.

You don’t win reliability by stuffing more text into the prompt. You win it by deciding what is relevant and why. Superplan centralizes context so an agent doesn’t have to guess which doc, ticket, or note is authoritative.

Explicit decisions, not vibes.

A surprising number of failures come from unstated choices: which approach is acceptable, which tradeoff is preferred, what “done” means. Superplan makes these choices visible so they can be agreed on before execution, not reverse-engineered afterward.

Constraints and goals that survive iteration.

Projects evolve. Teams change. The plan needs to outlive today’s chat thread. Superplan preserves decision memory so execution stays grounded even as context changes.

Superplan doesn’t make AI smarter. It makes AI disciplined.

That thesis is intentionally boring, because the real problem is boring: most agent systems are executing on uncommitted intent.

If explicit planning sounds like unwelcome friction, that reaction is the point. If a workflow can’t tolerate explicit planning, it usually means the plan was never stable enough to execute safely.

How execution changes once agents call real tools

Everything above matters even when agents are only reading data. It becomes unavoidable once agents start calling real tools.

Many modern agent systems now use MCP-style execution. Models do not just suggest actions anymore. They call APIs. They modify infrastructure. They write data. They trigger workflows across services. At this point ambiguity stops being theoretical. A missing constraint is no longer just a wrong answer. It becomes a bad deploy. It becomes a broken integration. It becomes corrupted state.

Superplan does not sit inside these execution systems and it does not replace them. It sits above them. It defines intent before execution happens. When agents act, they act on decisions that were already made, not on assumptions pulled from scattered context. MCP makes the cost of skipping planning obvious. Superplan exists to catch that cost before it reaches production.
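One way to picture “agents act on decisions that were already made” is a thin check between the agent and its tools. The sketch below is an assumption about how an execution layer might consume an approved plan; `ApprovedPlan`, `guarded_call`, `PlanViolation`, and the tool names are hypothetical and are not part of Superplan or of MCP.

```python
from dataclasses import dataclass
from typing import Callable


class PlanViolation(Exception):
    """Raised when a requested tool call falls outside the approved plan."""


@dataclass(frozen=True)
class ApprovedPlan:
    allowed_tools: frozenset[str]      # tools the plan permits
    forbidden_targets: frozenset[str]  # resources the plan rules out


def guarded_call(
    plan: ApprovedPlan,
    tool: str,
    target: str,
    run: Callable[[str, str], str],
) -> str:
    """Check a tool call against the plan, then execute it via `run`."""
    if tool not in plan.allowed_tools:
        raise PlanViolation(f"tool {tool!r} is not in the approved plan")
    if target in plan.forbidden_targets:
        raise PlanViolation(f"target {target!r} is excluded by the plan")
    return run(tool, target)


def fake_runner(tool: str, target: str) -> str:
    # Stand-in for a real tool dispatcher, such as an MCP client call.
    return f"{tool} ok on {target}"


plan = ApprovedPlan(
    allowed_tools=frozenset({"read_table", "open_pull_request"}),
    forbidden_targets=frozenset({"billing"}),
)

print(guarded_call(plan, "read_table", "reports", fake_runner))
try:
    guarded_call(plan, "read_table", "billing", fake_runner)
except PlanViolation as err:
    print("blocked:", err)
```

The point of the sketch is the ordering: the constraint is checked before the side effect happens, so a missing or violated decision surfaces as a rejected call instead of as corrupted state.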

Who this is for (and who it isn’t)

Superplan is for teams where AI execution has consequences:

- Senior engineers building AI agents that call real tools (APIs, codegen, infra, data writes).
- Founders running MCP-based workflows where drift creates product risk.
- Teams and organizations that need reproducibility, reviewability, alignment, and decision continuity across time, people, and iterations.

It is not for casual users experimenting with prompts, one-off content generation, or hobby projects where “close enough” is fine. Superplan adds discipline. If you don’t need discipline, you don’t need a planning substrate.

If you already have reliable agents without a real planning layer, you’re either unusually disciplined in your process, or you’re borrowing discipline from humans and calling it “agent autonomy.” The second option stops scaling the moment the system becomes important.